In just a few years, large language models (LLMs) have evolved from text autocomplete engines into agents that can reason, use tools, and perform autonomous decision-making.

This progression, from the early neural network models of the 1940s to today's multi-agent systems, marks a profound shift toward machines that not only process information but also "think" and interact autonomously.

This post traces the key breakthroughs that led to this transformation, and explores where the path of intelligent, self-directed AI might take us next.

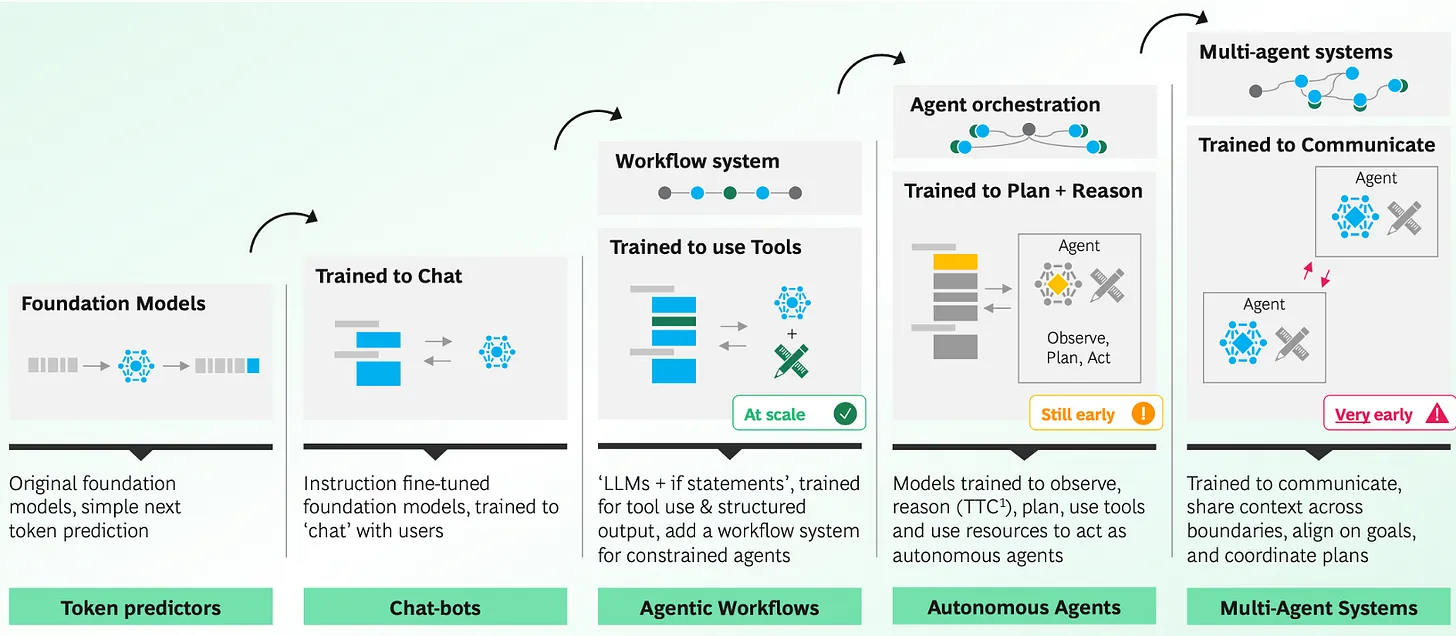

Timeline leading to Multi-Agent Systems

Source: AI Agents, and the Model Context Protocol, BCG

The diagram above and the timeline below trace the path from neural networks, deep learning, and transformers to the emergence of LLM agents, which are the foundation for multi-agent systems.

1940s - 2010s: Neural Network Foundations

The foundational period during which core neural network techniques were developed, enabling early AI applications.

1943: McCulloch & Pitts show that networks of simple artificial neurons can compute logical functions

1958: Rosenblatt develops the perceptron, the first trainable neural network model

1986: Backpropagation popularised, enabling multi-layer neural networks

1990s: Neural networks applied to speech, vision, and character recognition

2010 - 2020: Deep Learning, Transformers, LLMs

A transformative decade marked by breakthroughs in deep learning and transformer models, dramatically improving AI's capabilities.

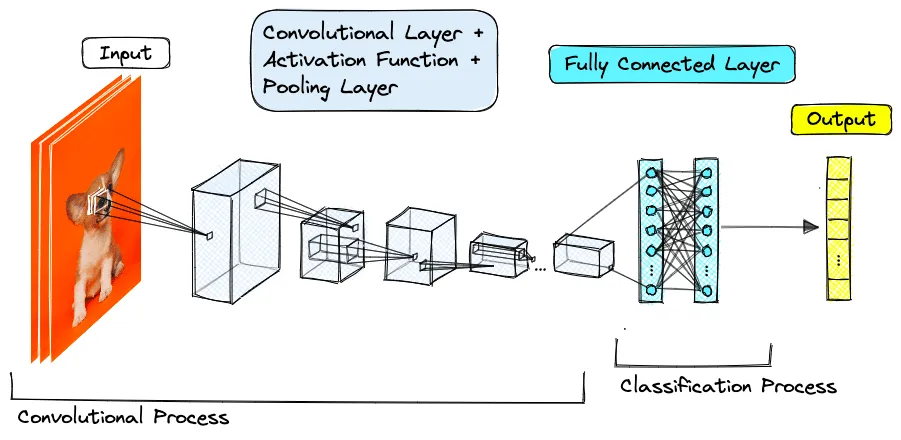

2012: AlexNet wins ImageNet visual recognition competition, igniting the deep learning revolution

2010s: GPU acceleration, big data, and deep learning spark the current AI boom

2017: The "Attention Is All You Need" paper introduces the Transformer architecture, enabling parallel training and paving the way for modern LLMs

2018: BERT model enables bidirectional language understanding and popularises fine-tuning for downstream NLP tasks

2019: GPT-2 released (1.5B params), first foundation model showing emergent language capabilities

2020: GPT-3 released (175B params), a landmark model showing that scaling produces strong few-shot and zero-shot performance

Example convolutional network. Source: pinecone.io

2021 - 2025: Reasoning, Tool Calling and Agents

Recent innovations enabling sophisticated reasoning, autonomous tool usage, and the rise of collaborative multi-agent systems.

2022: Chain-of-thought and ReAct prompt methods enhance LLM reasoning

2022: ChatGPT launched; instruction-following with RLHF becomes widespread

2023: Toolformer shows that LLMs can learn to invoke tools autonomously

2023: GPT-4 and Gemini 1.0 add multimodal text+image capabilities

2023: LangChain, AutoGPT, and other orchestration frameworks rise

2024: Structured JSON outputs well supported by GPT-4 and others

2025: Agent ecosystem grows (frameworks, protocols, tools)

Today: Awaiting GPT-5!

The Future

As LLM agents gain memory, planning, and tool use, a growing number of researchers are exploring where this leads. Here are three glimpses of what may come next:

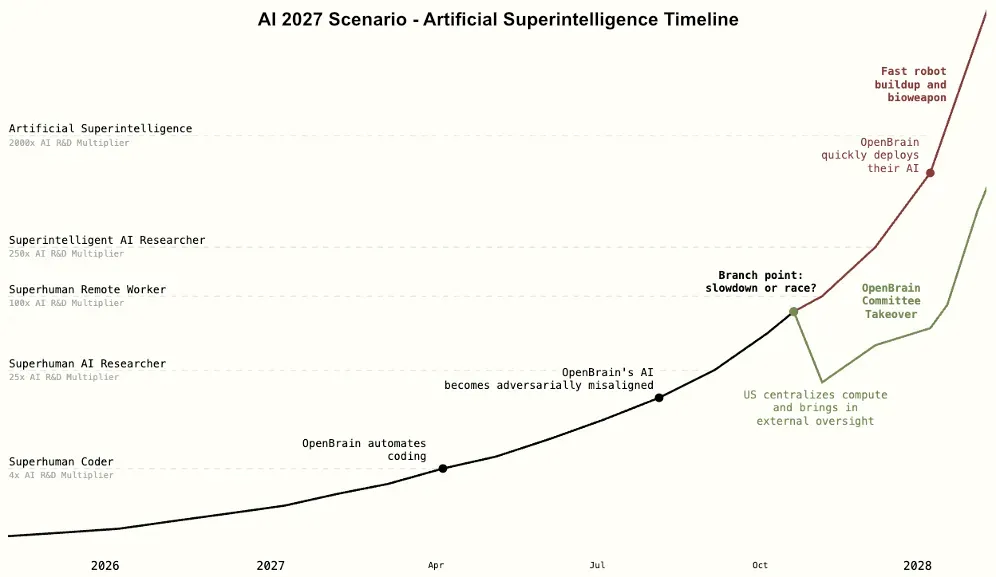

AI 2027 scenario to superhuman AI

The AI 2027 scenario is a detailed, narrative-based forecast by the nonprofit AI Futures Project. They predict, with a timeline, that the impact of superhuman AI over the next decade will be enormous, exceeding that of the Industrial Revolution. Time will tell if this is true.

Source: AI 2027 scenario

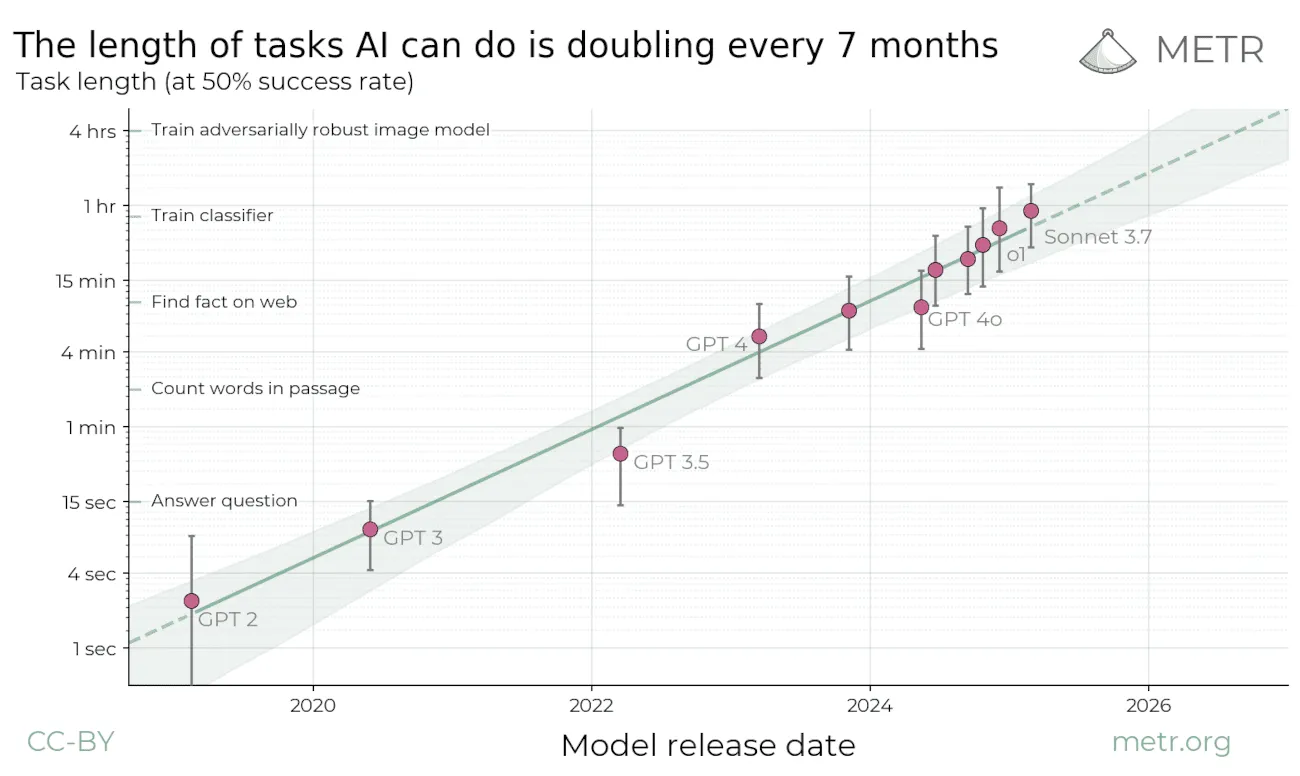

Evaluations on frontier AI agent systems

The Model Evaluation & Threat Research (METR) team evaluates how well frontier AI agent systems can complete complex tasks without human input. They claim that AI systems will probably be able to do most of what humans can do, including developing new technologies, starting businesses, and making money.

Their research measures the length of tasks (by how long they take human professionals) that frontier model agents can complete autonomously with 50% reliability. They have seen this length doubling approximately every 7 months for the last 6 years! Further details in their published paper.
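To make the doubling claim concrete, here is a minimal Python sketch of the arithmetic. It assumes the 7-month doubling time holds steadily, which is an extrapolation rather than a guarantee. The function names and the 60-minute starting horizon in the usage lines are illustrative assumptions, not METR figures.

```python
import math

DOUBLING_MONTHS = 7.0  # doubling period quoted in the text


def projected_horizon(current_minutes: float, months_ahead: float,
                      doubling_months: float = DOUBLING_MONTHS) -> float:
    """Task length (in human-minutes) after `months_ahead`, assuming steady doubling."""
    return current_minutes * 2 ** (months_ahead / doubling_months)


def months_to_reach(current_minutes: float, target_minutes: float,
                    doubling_months: float = DOUBLING_MONTHS) -> float:
    """Months needed to grow from the current task length to the target length."""
    return doubling_months * math.log2(target_minutes / current_minutes)


def growth_factor(years: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Total multiplier on task length after `years` of steady doubling."""
    return 2 ** (years * 12 / doubling_months)


# Hypothetical starting point: an agent that handles 60-minute tasks today.
one_year_out = projected_horizon(60, 12)
months_to_full_workday = months_to_reach(60, 8 * 60)
six_year_multiplier = growth_factor(6)
```

Under this assumption, six years of doubling every 7 months compounds to more than a thousandfold increase in task length, and a hypothetical 60-minute horizon would reach an 8-hour workday after three doublings, or 21 months.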

Source: METR team

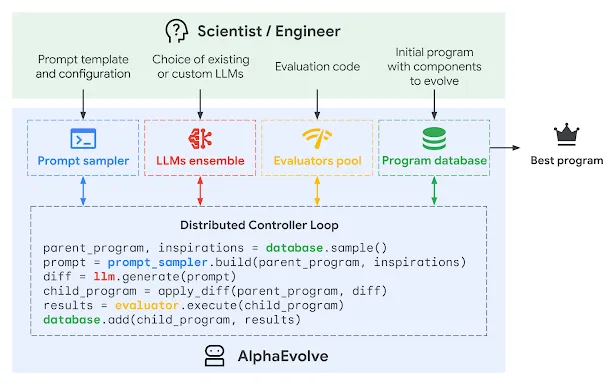

AlphaEvolve: An agent for designing advanced algorithms

AlphaEvolve is a coding agent by DeepMind for general-purpose algorithm discovery and optimisation, and its solutions often outperform human-crafted ones. It combines the creativity of LLMs with automated evaluators.

Across a selection of 50 open mathematical problems, the model was able to rediscover state-of-the-art solutions 75% of the time and discovered improved solutions 20% of the time. It also helped optimise Google’s infrastructure by improving data center scheduling (recovering 0.7% of compute power), boosting kernel performance (e.g., FlashAttention speed by up to 32.5%), and aiding chip/TPU design. More details in their paper.
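The general idea of pairing a candidate generator with an automated evaluator can be illustrated with a toy evolutionary loop. This is only a sketch of that pattern, not AlphaEvolve's actual implementation: the `propose` function below is a random-mutation stand-in for an LLM suggesting a modified candidate, and `evaluate`, `evolve`, and the toy objective are all hypothetical names chosen for illustration.

```python
import random


def evaluate(candidate: list[float]) -> float:
    """Toy automated evaluator: higher is better, with the optimum at [3.0, 3.0]."""
    return -sum((x - 3.0) ** 2 for x in candidate)


def propose(parent: list[float], rng: random.Random) -> list[float]:
    """Stand-in for an LLM proposing a variation of an existing candidate."""
    return [x + rng.gauss(0.0, 0.5) for x in parent]


def evolve(generations: int = 200, population_size: int = 8, seed: int = 0) -> list[float]:
    """Repeatedly propose variations, score them, and keep the best candidates."""
    rng = random.Random(seed)
    population = [[0.0, 0.0]]  # initial candidate
    for _ in range(generations):
        # Pick a promising parent from a small random sample (tournament selection).
        sample = rng.sample(population, min(3, len(population)))
        parent = max(sample, key=evaluate)
        population.append(propose(parent, rng))
        # The evaluator decides which candidates survive to the next round.
        population = sorted(population, key=evaluate, reverse=True)[:population_size]
    return population[0]


best = evolve()
best_score = evaluate(best)
```

Because the best candidates are always retained, the score of `best` can never fall below that of the starting candidate. In a real system the evaluator would run and measure actual programs rather than score a pair of numbers.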

Source: AlphaEvolve blog post

Conclusion

From perceptrons and backpropagation to ChatGPT and autonomous agents, the arc of AI progress reveals a steady climb toward increasingly capable, self-directed systems. LLM agents represent a pivotal shift: models that not only generate language but also wield tools, remember context, and act in dynamic environments.

Agents are rapidly evolving into general-purpose collaborators, capable of co-inventing technologies, optimising real-world systems, and operating autonomously across domains. With the length of tasks they can complete doubling roughly every 7 months, they may soon drive breakthroughs in science, business, infrastructure, and LLM research itself.

Ensuring alignment, safety, and meaningful oversight will be critical as we move into this new era of intelligent, thinking machines.